
Secure Code Reviews in 2026

Understanding AI’s strengths and limits

Alex Mercer

Dec 12, 2025

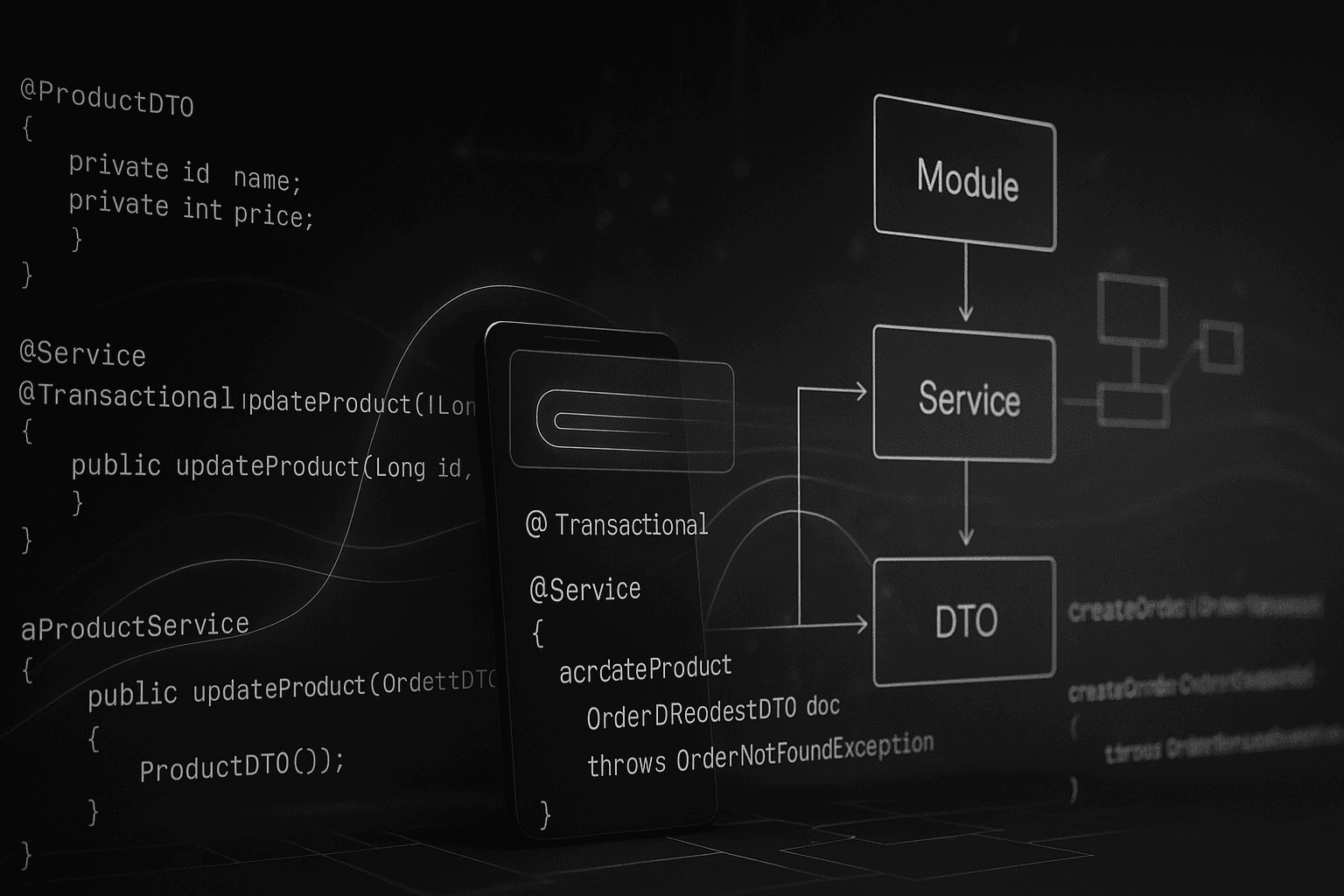

AI-assisted security tools are increasingly being used to scan code for vulnerabilities, helping developers catch common issues like unsafe functions, weak encryption, or missing validations. But real-world vulnerabilities rarely live in isolation. They hide in cross-module interactions, framework configurations, and multi-step logic that automated scanners struggle to interpret.

That gap is why secure code review in 2026 requires more than signature checks or generic AI suggestions. Teams need clarity on what AI can reliably detect, and where human judgment, domain context, and deeper analysis are still essential.

Key takeaways

According to Veracode's State of Software Security report, over 74% of applications had at least one security flaw in their latest scan, which shows how widespread vulnerabilities remain even when automated scanning is part of the workflow.

AI-assisted tools can analyze patterns, dependencies, and code structure to flag higher-risk issues, but false positives remain a challenge.

Combining static analysis, automated tests, and context-aware AI reviews provides deeper coverage of business logic, multi-module impacts, and potential security flaws.

Context-aware AI tools like cubic reduce noise and highlight actionable risks, helping teams focus on genuine security concerns.

What is a secure code review?

A secure code review identifies vulnerabilities, logic flaws, and misconfigurations in software before deployment. It also checks that coding guidelines, security standards, and architectural principles are applied consistently. Enterprise Java applications, with their many modules, dependencies, and critical business workflows, need reviews that go beyond surface-level checks to catch hidden risks.

Secure code review checklist for 2026

Before diving into AI capabilities, it’s helpful to outline what a secure code review should cover. Key items include:

| Area | Checks |
| --- | --- |
| Input validation | Validate all user input and handle it safely (parameterized queries, output encoding) to prevent SQL injection, XSS, and command injection. |
| Authentication & authorization | Verify that login flows, role checks, and access restrictions are implemented correctly. |
| Dependency management | Check third-party libraries for known vulnerabilities and outdated versions. |
| Error handling & logging | Confirm that sensitive data is not exposed in logs and that errors are handled safely. |
| Cryptography | Verify that approved algorithms, modes, and key lengths are used for data at rest and in transit. |
| Configuration & environment | Review environment-specific settings, secrets management, and service accounts. |
| Test coverage | Ensure unit, integration, and security-focused tests cover critical paths and edge cases. |
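To make the input validation row concrete, here is a minimal allowlist check in Java. The class name `InputValidator` and the username rule (3 to 32 letters, digits, underscores, or hyphens) are hypothetical; real rules depend on the field being validated. Allowlist validation complements, but does not replace, parameterized queries and output encoding.

```java
import java.util.regex.Pattern;

// Hypothetical allowlist validator for a username field (illustrative rules only).
final class InputValidator {
    // Accept 3-32 characters: letters, digits, underscore, hyphen. Everything else is rejected.
    // matches() requires the whole input to fit the pattern, so no anchors are needed.
    private static final Pattern USERNAME = Pattern.compile("[A-Za-z0-9_-]{3,32}");

    private InputValidator() {}

    static boolean isValidUsername(String input) {
        // Reject null explicitly; matcher() would throw NullPointerException otherwise
        return input != null && USERNAME.matcher(input).matches();
    }
}
```

Rejecting anything that does not match a narrow pattern is usually easier to reason about in review than trying to strip out every dangerous character.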

What can AI reliably catch in a secure code review?

AI-assisted security reviews excel at identifying repetitive patterns and common vulnerabilities, especially those that follow known signatures or security rules. Examples include:

Injection flaws such as SQL injection or XSS, where user input is improperly sanitized.

Unsafe deserialization and other serialization vulnerabilities that could be exploited remotely.

Vulnerable dependencies flagged in vulnerability databases, and outdated library versions.

Authentication and authorization gaps that follow common misconfiguration patterns.

Logging and error-handling inconsistencies that might leak sensitive data.
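Many of the items above can be expressed as signatures. The toy scanner below is a hypothetical illustration, not how any particular product works: it matches each source line against a few regular expressions (string-concatenated SQL, MD5/SHA-1 hashing, native deserialization via `ObjectInputStream`). Real tools parse code and track data flow, but the sketch shows why these patterns are cheap to catch, and why a line-by-line rule has no view of cross-module logic.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Toy signature-based scanner (hypothetical; real tools use parsing and data-flow analysis).
final class SignatureScanner {
    // Each rule pairs a finding label with a regex applied to a single source line.
    private static final Map<String, Pattern> RULES = new LinkedHashMap<>();

    static {
        RULES.put("SQL built by string concatenation",
                Pattern.compile("execute(Query|Update)?\\s*\\(\\s*\"[^\"]*\"\\s*\\+"));
        RULES.put("Weak hash algorithm",
                Pattern.compile("MessageDigest\\.getInstance\\(\\s*\"(MD5|SHA-1)\""));
        RULES.put("Native Java deserialization",
                Pattern.compile("new\\s+ObjectInputStream\\s*\\("));
    }

    private SignatureScanner() {}

    // Returns "line N: label" for every rule that matches a line (1-based line numbers).
    static List<String> scan(String source) {
        List<String> findings = new ArrayList<>();
        String[] lines = source.split("\n");
        for (int i = 0; i < lines.length; i++) {
            for (Map.Entry<String, Pattern> rule : RULES.entrySet()) {
                if (rule.getValue().matcher(lines[i]).find()) {
                    findings.add("line " + (i + 1) + ": " + rule.getKey());
                }
            }
        }
        return findings;
    }
}
```

Each rule sees one line at a time, so it can flag `executeQuery("... " + id)` but cannot tell whether a discount computed in another module was ever validated.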

According to a 2023 report by CodeAnt, AI platforms can automatically detect over 70% of low-to-medium severity security vulnerabilities in enterprise codebases.

Example: A context-aware AI tool can flag a potentially risky business logic flow, such as a multi-step transaction in which a discount override in one module is applied even though a prior validation in another module failed. Even if each module passes standard checks on its own, the tool can flag this cross-module pattern as high-risk because it deviates from the safe patterns observed in previous PRs.
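One way to make a flow like this easier to review, for people and tools alike, is to encode the required step order explicitly. The sketch below rests on assumptions: `Order` and its four states are hypothetical, and a real system would persist the state and enforce it across services. It rejects a discount override unless validation has passed.

```java
// Hypothetical order workflow that makes step ordering explicit (illustrative only).
final class Order {
    enum State { CREATED, VALIDATED, DISCOUNTED, APPROVED }

    private State state = State.CREATED;

    State state() { return state; }

    // Step 1: validation either moves the order forward or leaves it in CREATED.
    void validate(boolean passed) {
        requireState(State.CREATED);
        if (passed) {
            state = State.VALIDATED;
        }
    }

    // Step 2: a discount override is only allowed after validation has passed.
    void applyDiscountOverride() {
        requireState(State.VALIDATED);
        state = State.DISCOUNTED;
    }

    // Step 3: approval requires a validated order, with or without a discount.
    void approve() {
        if (state != State.VALIDATED && state != State.DISCOUNTED) {
            throw new IllegalStateException("Cannot approve order in state " + state);
        }
        state = State.APPROVED;
    }

    private void requireState(State expected) {
        if (state != expected) {
            throw new IllegalStateException("Expected " + expected + " but was " + state);
        }
    }
}
```

Because every transition checks the current state, skipping or reordering a step fails loudly instead of silently approving a discounted order.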

What does AI often miss in a secure code review?

Despite these strengths, AI still struggles with context-specific and workflow-dependent risks. These require understanding how the application behaves across modules, runtime states, or business logic. Examples include:

Business logic vulnerabilities: AI may not detect steps that are skipped or executed out of sequence, leading to unintended consequences.

Multi-step or cross-module issues: Vulnerabilities can arise from interactions between services or modules, which AI may see as separate changes.

Complex cryptography misuse: Subtle errors like incorrect key lengths, improper padding, or context-specific encryption flaws may go unnoticed.

Non-standard access control flaws: Flaws in custom role and permission implementations may not match standard AI detection rules.
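Cryptography misuse often hides in a single string argument. The sketch below uses the standard JCA classes (`Cipher`, `KeyGenerator`, `GCMParameterSpec`) to encrypt with AES-GCM, spelling out the mode, a 256-bit key, a fresh 96-bit IV per message, and a 128-bit authentication tag. The class name `AesGcmExample` and the IV-prefixed message layout are assumptions for illustration; key storage and rotation are out of scope.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

// Sketch of authenticated encryption with AES-GCM using the standard JCA APIs.
final class AesGcmExample {
    private static final int KEY_BITS = 256; // explicit key length
    private static final int IV_BYTES = 12;  // 96-bit nonce, the recommended size for GCM
    private static final int TAG_BITS = 128; // full-length authentication tag
    private static final SecureRandom RANDOM = new SecureRandom();

    private AesGcmExample() {}

    static SecretKey newKey() throws GeneralSecurityException {
        KeyGenerator generator = KeyGenerator.getInstance("AES");
        generator.init(KEY_BITS);
        return generator.generateKey();
    }

    // Returns IV || ciphertext+tag. A fresh random IV is generated for every message,
    // because reusing an IV with the same key breaks GCM's guarantees.
    static byte[] encrypt(SecretKey key, String plaintext) throws GeneralSecurityException {
        byte[] iv = new byte[IV_BYTES];
        RANDOM.nextBytes(iv);
        // Spell out mode and padding instead of relying on the provider default for "AES"
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
        byte[] ciphertext = cipher.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
        byte[] out = new byte[IV_BYTES + ciphertext.length];
        System.arraycopy(iv, 0, out, 0, IV_BYTES);
        System.arraycopy(ciphertext, 0, out, IV_BYTES, ciphertext.length);
        return out;
    }

    static String decrypt(SecretKey key, byte[] message) throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, message, 0, IV_BYTES));
        // doFinal throws AEADBadTagException if the ciphertext or tag was modified
        byte[] plaintext = cipher.doFinal(message, IV_BYTES, message.length - IV_BYTES);
        return new String(plaintext, StandardCharsets.UTF_8);
    }
}
```

A reviewer would look for the opposite: `Cipher.getInstance("AES")`, which resolves to ECB mode with the default SunJCE provider, or an IV that is constant or reused across messages.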

Example: In a multi-module order processing system:

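```java
import java.math.BigDecimal;

// Payment DTO with a new field
public class PaymentDTO {
    private BigDecimal amount;
    private BigDecimal discountAmount; // new field, may be null

    public BigDecimal getAmount() { return amount; }
    public BigDecimal getDiscountAmount() { return discountAmount; }
}

// Payment service: validation not updated yet
BigDecimal total = dto.getAmount().subtract(dto.getDiscountAmount());
// Throws NullPointerException if discountAmount is null; if it is set,
// the discount is applied without any validation
```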

In this scenario, AI might flag basic input validation issues but miss that a discount which is set but never validated could be applied incorrectly, allowing unauthorized order approval.
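A minimal sketch of closing this particular gap, assuming two business rules that the example does not state: a missing discount means no discount, and a discount may not be negative or exceed the order amount. `DiscountedTotal` is a hypothetical name. Whether a given discount is authorized at all is a separate business rule that this check cannot know, which is exactly the part a human reviewer has to confirm.

```java
import java.math.BigDecimal;

// Hypothetical validated total calculation; the rules below are assumptions.
final class DiscountedTotal {
    private DiscountedTotal() {}

    static BigDecimal compute(BigDecimal amount, BigDecimal discountAmount) {
        if (amount == null || amount.signum() < 0) {
            throw new IllegalArgumentException("amount must be non-negative");
        }
        // Treat a missing discount as zero instead of letting subtract() throw NullPointerException
        BigDecimal discount = (discountAmount == null) ? BigDecimal.ZERO : discountAmount;
        if (discount.signum() < 0 || discount.compareTo(amount) > 0) {
            throw new IllegalArgumentException("discount must be between 0 and the order amount");
        }
        return amount.subtract(discount);
    }
}
```

`compareTo` is used instead of `equals` because `BigDecimal.equals` also compares scale, so `100.0` and `100.00` would not be equal.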

Even advanced AI platforms can flag safe code as risky, creating false positives that slow down reviews. cubic’s approach to context-aware analysis significantly reduces these false positives.

Combining AI, static analysis, and human review for deeper coverage

AI-assisted tools are powerful, but they work best as part of a layered review strategy. Enterprise teams often integrate multiple verification methods to catch vulnerabilities that automated systems alone might miss:

1. Static analysis for surface-level issues

Tools like Checkstyle, PMD, and SpotBugs (the successor to FindBugs) detect formatting issues, code smells, and common bug patterns. They quickly catch violations of coding standards and highlight potential risks without requiring human context.
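As an example of the kind of anti-pattern these tools flag without any business context, consider a role check that compares strings with `==`. Bug-pattern detectors such as SpotBugs include a rule for this, because `==` compares object identity rather than content. `RoleCheck` is a hypothetical class used only for illustration.

```java
// Illustration of a classic bug pattern: comparing strings with == checks identity, not content.
final class RoleCheck {
    private RoleCheck() {}

    // Buggy: only true when both references point to the same String object
    static boolean isAdminBuggy(String role) {
        return role == "admin";
    }

    // Fixed: compares content; putting the constant first makes a null role return false
    static boolean isAdmin(String role) {
        return "admin".equals(role);
    }
}
```

The buggy version can pass casual testing, because string literals are interned and `"admin" == "admin"` is true; it fails for strings created at runtime, such as values parsed from a request.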

2. Automated tests for functional correctness and security

Unit, integration, and security-focused tests verify that code behaves as expected. They help catch logic errors, enforce workflow rules, and validate that edge cases are properly handled. Continuous integration pipelines ensure these tests run on every pull request.
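A security-focused test can pin down an edge case from the checklist, such as keeping card numbers out of logs. The sketch below assumes a hypothetical `LogMasking.maskCardNumber` helper and uses a plain-Java `check` method in place of a test framework such as JUnit, so it runs without dependencies.

```java
// Hypothetical helper that masks a card number before it is written to a log.
final class LogMasking {
    private LogMasking() {}

    // Keeps only the last four digits; null or inputs of four digits or fewer are fully masked.
    static String maskCardNumber(String cardNumber) {
        if (cardNumber == null) {
            return "****";
        }
        String digits = cardNumber.replaceAll("\\D", "");
        if (digits.length() <= 4) {
            return "****";
        }
        return "*".repeat(digits.length() - 4) + digits.substring(digits.length() - 4);
    }
}

// Plain-Java stand-in for a unit test; a real project would likely use JUnit assertions.
final class LogMaskingTest {
    private LogMaskingTest() {}

    static void run() {
        String masked = LogMasking.maskCardNumber("4111 1111 1111 1111");
        check(masked.equals("************1111"), "last four digits kept");
        check(!masked.contains("41111111"), "full number never appears");
        check(LogMasking.maskCardNumber("123").equals("****"), "short input fully masked");
        check(LogMasking.maskCardNumber(null).equals("****"), "null handled");
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Running a check like this on every pull request turns "sensitive data is not exposed in logs" from a review comment into a regression test.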

3. AI-assisted security review for context awareness

cubic, a context-aware AI tool, evaluates cross-module dependencies, business logic adherence, and historical patterns from previous PRs. It identifies potential high-risk areas that require human attention while filtering out low-value noise.

Combining AI-assisted tools with manual reviews makes it more likely that subtle business logic flaws and multi-step vulnerabilities are caught. This is where the differences between AI and manual reviews show up in practice.

What are common mistakes in secure code reviews, and how can you avoid them?

Even with structured checklists and AI-assisted reviews, teams can fall into traps that let vulnerabilities slip through. Recognizing these pitfalls helps teams strengthen their security practices:

Over-reliance on automation: Many teams assume automated scans catch everything. While AI flags common issues, subtle business logic flaws, cross-module interactions, and context-specific risks often require human judgment.

Ignoring multi-module or cross-service impacts: Changes in one service or module can affect downstream systems. Reviews that focus only on the immediate code risk missing multi-step vulnerabilities or hidden side effects.

Delayed dependency checks: Waiting until late in the release cycle to review third-party libraries leaves critical vulnerabilities undetected until they are costly to fix. Continuous monitoring of dependency health is essential.

Inconsistent documentation: Lack of context in review comments or missing explanations for architectural decisions can make future audits harder and obscure the rationale behind security decisions.

Focusing only on high-severity issues: Low- and medium-severity flaws often accumulate and create systemic vulnerabilities. A comprehensive approach ensures even subtle risks are addressed.

By combining a secure code review checklist, layered verification, and context-aware tools, teams can reduce these common pitfalls, catch both obvious and subtle vulnerabilities, and maintain consistent, reliable security standards.

AI code review platforms for security and dependency issues (2026)

Not all AI code review platforms have the same features. Some focus on surface-level security alerts, others on dependency checks, while a few combine context-aware insights with actionable fixes.

With that in mind, here are the key capabilities that matter for enterprise secure code reviews in 2026:

| Platform | Strengths | Context Awareness | Security & Dependency Coverage | Workflow Integration | Pricing |
| --- | --- | --- | --- | --- | --- |
| cubic | Context-aware AI reviews, highlights real risks, reduces false positives | High | Security vulnerabilities + dependency issues + multi-module logic | Pull-request native, integrates with CI/CD | Free; $24/month (annual) or $30/month |
| CodeAnt AI | PR-native AI, SAST + dependency checks | Medium | Known vulnerabilities, basic context patterns | Pull-request integration | $10–$20 per user/month |
| Snyk | Open-source dependency risk detection, license compliance | Low | Dependency-focused | Integrates with CI/CD | Free; Team ~$25/dev/month |
| SonarQube | Static code quality analysis, some security rules | Low | Quality + baseline security | CI/CD and IDE integration | Free; Team ~$32/month |

Context-aware reviews: the key to secure code in 2026

Secure code review in modern enterprise environments requires more than signature-based scanning. AI tools are powerful for catching repetitive, predictable vulnerabilities, but context gaps remain. Multi-module interactions, workflow-specific logic, and configuration nuances can hide real risks.

By combining static analysis, automated testing, and context-aware AI review tools like cubic, teams reduce false positives, surface actionable risks, and gain confidence that critical vulnerabilities are caught before deployment.

Request a demo to experience how cubic enhances your security reviews directly in pull requests and CI/CD.